Local HW specs for Hosting meta-llama/Llama-3.2-11B-Vision: "Dear all, can you please share the HW specs (RAM, VRAM, GPU, CPU, SSD) for a server that will be used to host…"

Foundation models in IBM watsonx.ai

*How to run Llama 3.2 11B Vision with Hugging Face Transformers on*

Foundation models in IBM watsonx.ai: llama-3-2-11b-vision-instruct (new in 5.1.0), a pretrained and fine-tuned… Note: this model can be prompt-tuned. CPUs: 2; memory: 128 GB RAM; storage: 62 GB.

OOM part way through epoch using llama3.2 vision finetuning

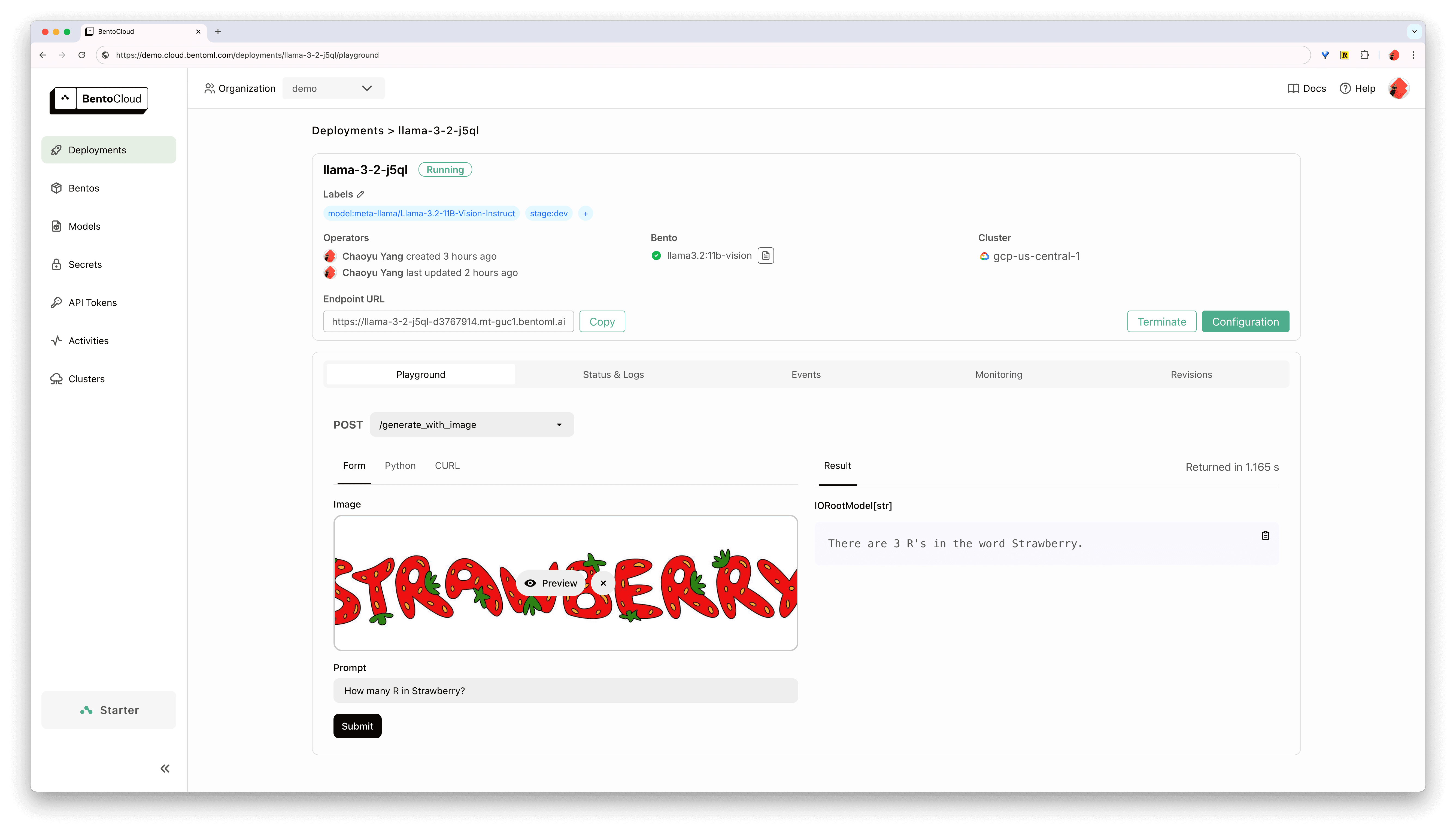

Deploying Llama 3.2 Vision with OpenLLM: A Step-by-Step Guide

OOM part way through epoch using llama3.2 vision finetuning: after `tune download meta-llama/Llama-3.2-11B-Vision-Instruct`, and looking at the full fine-tuning memory allocation graph, it looks much…
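A rough estimate shows why full fine-tuning of an 11B model runs short on memory. The sketch below is an assumption-laden back-of-the-envelope calculation, not torchtune's own accounting: it uses the common rule of thumb of 16 bytes per parameter for mixed-precision AdamW (bf16 weights and gradients, fp32 master weights, two fp32 Adam moments), treats the model as a nominal 11e9 parameters, and ignores activations. The 0.5% LoRA trainable fraction is a hypothetical value.

```python
# Rough memory model for full fine-tuning with mixed-precision AdamW.
# Assumption: the common 16-bytes-per-parameter rule of thumb.
# Activations, CUDA context, and fragmentation are NOT included.

PARAMS = 11e9  # nominal parameter count of Llama 3.2 11B Vision

BYTES_PER_PARAM = {
    "bf16 weights": 2,
    "bf16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam first moment": 4,
    "fp32 Adam second moment": 4,
}


def full_finetune_gb(num_params: float) -> dict[str, float]:
    """Per-component memory estimate in GB (1 GB = 1e9 bytes)."""
    return {name: num_params * b / 1e9 for name, b in BYTES_PER_PARAM.items()}


def lora_finetune_gb(num_params: float, trainable_fraction: float) -> float:
    """Frozen bf16 base weights plus full AdamW state for adapters only.

    `trainable_fraction` is a hypothetical knob: the share of parameters
    living in LoRA adapters (often well under 1%).
    """
    frozen = num_params * 2                      # bf16, no grads or optimizer state
    adapter = num_params * trainable_fraction * 16  # same 16 B/param rule
    return (frozen + adapter) / 1e9


if __name__ == "__main__":
    parts = full_finetune_gb(PARAMS)
    for name, gb in parts.items():
        print(f"{name:>24}: {gb:6.1f} GB")
    print(f"{'total (no activations)':>24}: {sum(parts.values()):6.1f} GB")
    print(f"LoRA @ 0.5% trainable: {lora_finetune_gb(PARAMS, 0.005):.1f} GB")
```

Under these assumptions full fine-tuning needs about 176 GB before activations, far more than one GPU holds, while a LoRA-style setup is dominated by the ~22 GB of frozen bf16 weights. Activations grow with batch size, sequence length, and image resolution, which is one plausible (unconfirmed) reason an OOM can surface partway through an epoch rather than at the first step.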

Getting Started — NVIDIA NIM for Vision Language Models (VLMs)

neuralmagic/Llama-3.2-11B-Vision-Instruct-FP8-dynamic · Hugging Face

Getting Started — NVIDIA NIM for Vision Language Models (VLMs): give a name to the NIM container for bookkeeping (here `meta-llama-3-2-11b-vision-instruct`). Many VLMs such as `meta/llama-3.2-11b-vision-instruct`…

Llama can now see and run on your device - welcome Llama 3.2

How Much GPU Memory for Llama 3.2 11B Vision Model

Llama can now see and run on your device - welcome Llama 3.2: for reference, the 11B Vision model takes about 10 GB of GPU RAM during inference in 4-bit mode. The easiest way to infer with the instruction-…
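The ~10 GB figure for 4-bit inference can be sanity-checked against the raw weight footprint. This minimal sketch assumes a nominal 11e9 parameters and 1 GB = 1e9 bytes, and ignores quantization metadata (scales, zero points) as well as any layers kept in higher precision. The fp8 row corresponds to FP8 checkpoints such as the neuralmagic one listed above, as a weight-size estimate only.

```python
# Weight-only memory for a nominal 11B-parameter model at several precisions.
# Assumptions: 1 GB = 1e9 bytes; quantization metadata and any layers kept
# in higher precision are ignored, so real checkpoints are somewhat larger.

PARAMS = 11e9

BITS_PER_PARAM = {
    "fp32": 32,
    "bf16/fp16": 16,
    "fp8": 8,
    "int4/nf4": 4,
}


def weight_gb(num_params: float, bits: int) -> float:
    """Storage for `num_params` weights at `bits` bits each, in GB."""
    return num_params * bits / 8 / 1e9


for name, bits in BITS_PER_PARAM.items():
    print(f"{name:>10}: {weight_gb(PARAMS, bits):5.1f} GB")
```

This prints 44.0, 22.0, 11.0, and 5.5 GB. At 4 bits the weights alone come to roughly 5.5 GB, so the rest of the quoted ~10 GB would go to things the sketch leaves out, such as activations, the KV cache, the CUDA context, and any modules left unquantized.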

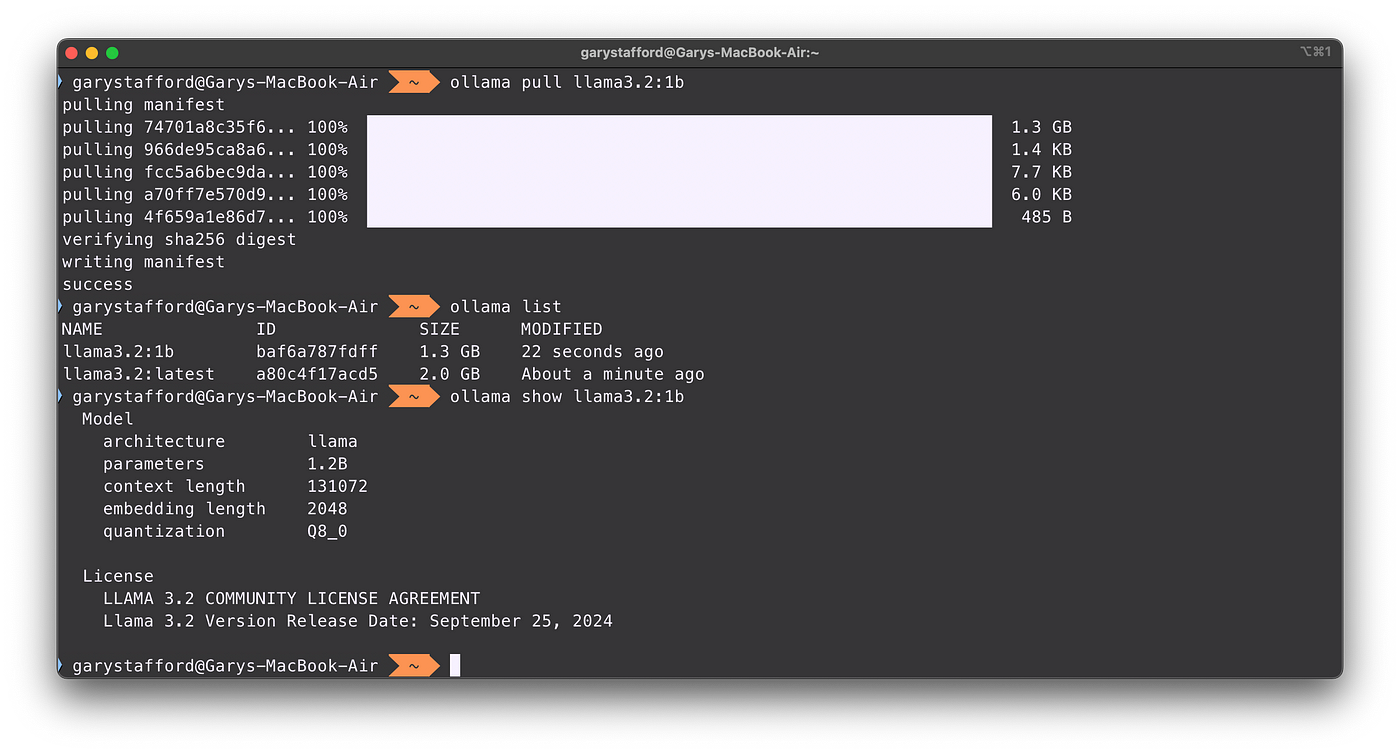

*Local Inference with Meta’s Latest Llama 3.2 LLMs Using Ollama*

How Much GPU Memory for Llama 3.2 11B Vision Model: for optimal performance of Llama 3.2 11B, 24 GB of GPU memory (VRAM) is recommended to handle its 11 billion parameters and high-resolution…
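To see how much headroom 24 GB leaves, this sketch subtracts bf16 weights (about 22 GB for a nominal 11e9 parameters) and a fixed overhead from the card, then divides what remains by the per-token KV-cache size. The architecture constants (32 self-attention layers, 8 key/value heads, head dimension 128) are recalled from the published text-decoder config and should be checked against the model's `config.json`; the 1.5 GB overhead is hypothetical, the card is treated as exactly 24e9 bytes, and the cross-attention cache for image features is not counted.

```python
# Does a bf16 copy of the model fit on a 24 GB card, and how much KV cache
# is left over? Architecture numbers are assumptions; verify in config.json.

SELF_ATTN_LAYERS = 32  # assumed: 40 decoder layers, 8 of them cross-attention
KV_HEADS = 8           # assumed (grouped-query attention)
HEAD_DIM = 128         # assumed
DTYPE_BYTES = 2        # bf16 / fp16


def kv_bytes_per_token() -> int:
    """Key + value tensors for one text token across all self-attention layers."""
    return 2 * SELF_ATTN_LAYERS * KV_HEADS * HEAD_DIM * DTYPE_BYTES


def max_cached_tokens(card_gb: float, weights_gb: float, overhead_gb: float) -> int:
    """Tokens of KV cache that fit after weights and a fixed overhead (GB = 1e9 B)."""
    free_bytes = (card_gb - weights_gb - overhead_gb) * 1e9
    return max(0, int(free_bytes // kv_bytes_per_token()))


if __name__ == "__main__":
    print(kv_bytes_per_token())  # 131072 bytes = 128 KiB per token
    # Hypothetical 1.5 GB for CUDA context, activations, and vision-encoder work
    print(max_cached_tokens(card_gb=24, weights_gb=22, overhead_gb=1.5))  # 3814
```

Under these assumptions only a few thousand tokens of cache remain, so 24 GB is closer to a floor than a comfortable margin for bf16 inference; quantized weights free up most of that space.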

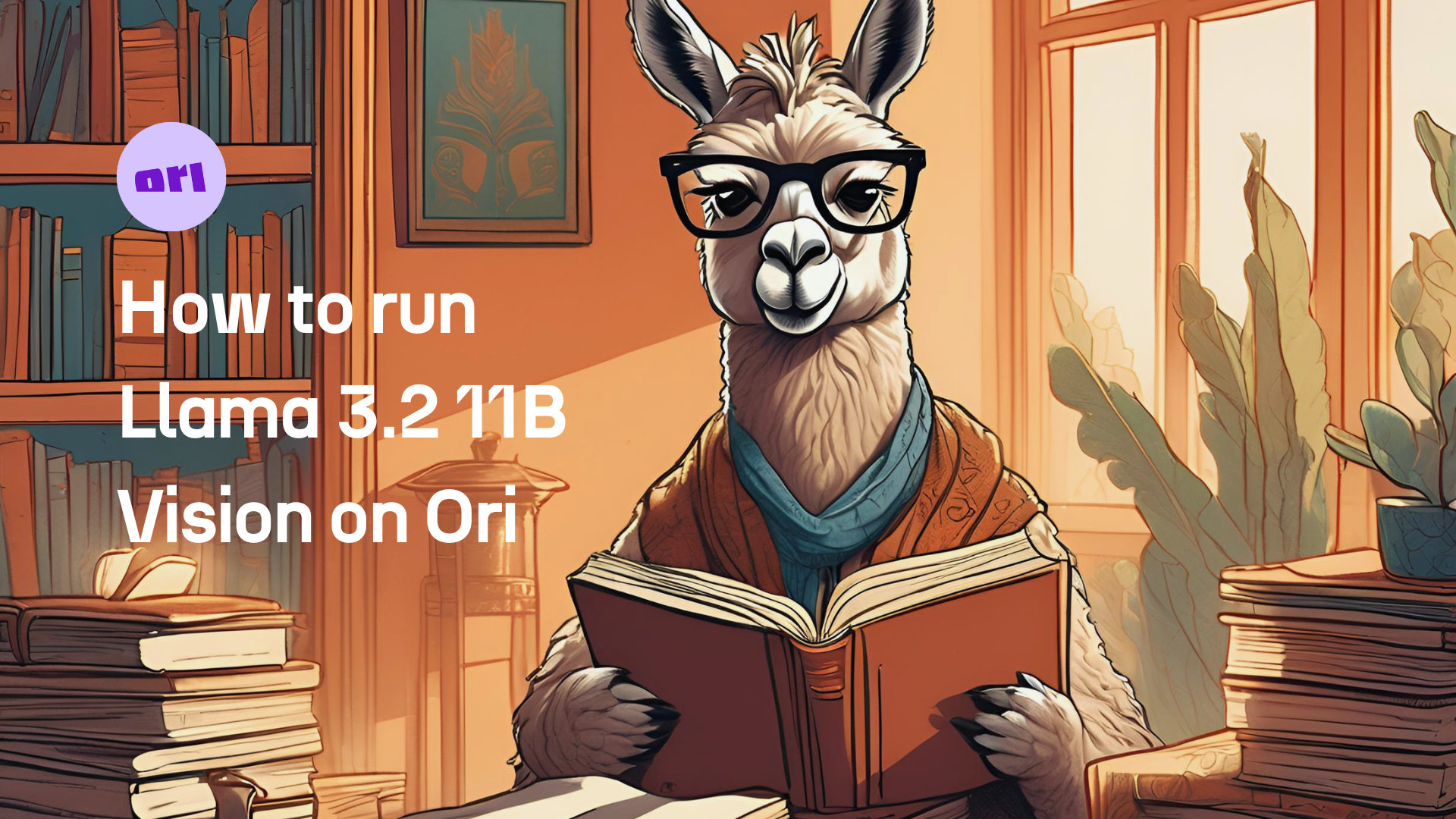

How to run Llama 3.2 11B Vision with Hugging Face Transformers

*Finetune Llama3.2 Vision Model On Databricks Cluster With VPN*

How to run Llama 3.2 11B Vision with Hugging Face Transformers: learn how to deploy Meta’s multimodal Llama 3.2 11B Vision model with Hugging Face Transformers on an Ori cloud GPU and see how it compares…

Finetune Llama3.2 Vision Model On Databricks Cluster With VPN

Finetune Llama3.2 Vision Model On Databricks Cluster With VPN: many online tutorials use NVIDIA A100 GPUs, each with 40 GB of VRAM, which is sufficient for fine-tuning the Llama 3.2 Vision model with 11B parameters.

`--gpu-memory-utilization 0.95 --model`… How can we optimize llama-3.2-11b to run on 4 T4 GPUs?
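The 4 × T4 question can be framed as a per-GPU budget. vLLM's `--gpu-memory-utilization` sets the fraction of each GPU's memory the engine may use, and `--tensor-parallel-size` splits the model across GPUs. This sketch assumes 16 GB per T4, fp16 weights (the T4 lacks native bf16 support), an even split of a nominal 11e9 parameters, and no allowance for CUDA graphs or activation peaks; the `plan` helper and `ShardPlan` type are hypothetical, not part of vLLM.

```python
# Per-GPU budget when sharding the model across 4 T4s with tensor parallelism.
# Assumptions: 16 GB per T4, fp16 weights split evenly, no CUDA-graph or
# activation-peak allowance. `plan`/`ShardPlan` are illustrative only.

from dataclasses import dataclass


@dataclass
class ShardPlan:
    budget_gb: float    # memory the engine may use on each GPU
    weights_gb: float   # this GPU's share of the weights
    kv_cache_gb: float  # what remains for the KV cache


def plan(num_params: float, num_gpus: int, gpu_gb: float,
         utilization: float, bytes_per_param: int = 2) -> ShardPlan:
    budget = gpu_gb * utilization  # mirrors --gpu-memory-utilization
    weights = num_params * bytes_per_param / 1e9 / num_gpus
    return ShardPlan(budget, weights, max(0.0, budget - weights))


if __name__ == "__main__":
    p = plan(num_params=11e9, num_gpus=4, gpu_gb=16, utilization=0.95)
    print(f"budget {p.budget_gb:.1f} GB, weights {p.weights_gb:.1f} GB, "
          f"KV cache {p.kv_cache_gb:.1f} GB per GPU")
```

With these numbers each GPU has a 15.2 GB budget, holds about 5.5 GB of weights, and leaves roughly 9.7 GB for the KV cache. Real headroom will be smaller once vision-encoder activations and CUDA graph memory are accounted for.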