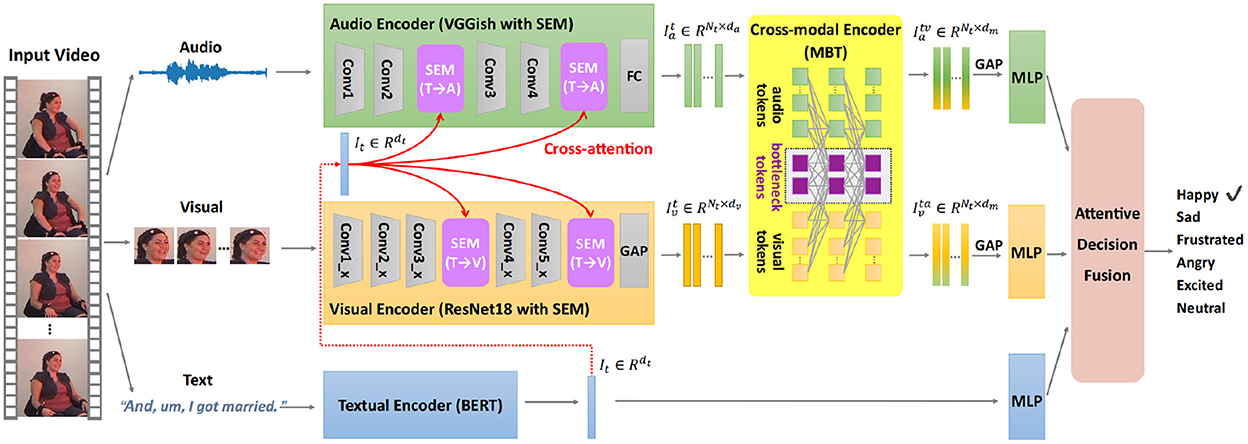

Tailor Versatile Multi-modal Learning for Multi-label Emotion Recognition. In this paper, we propose versaTile multi-modAl learning for multI-labeL emOtion Recognition (TAILOR), aiming to refine multi-modal representations and enhance …

Chongjun Wang - Google Scholar

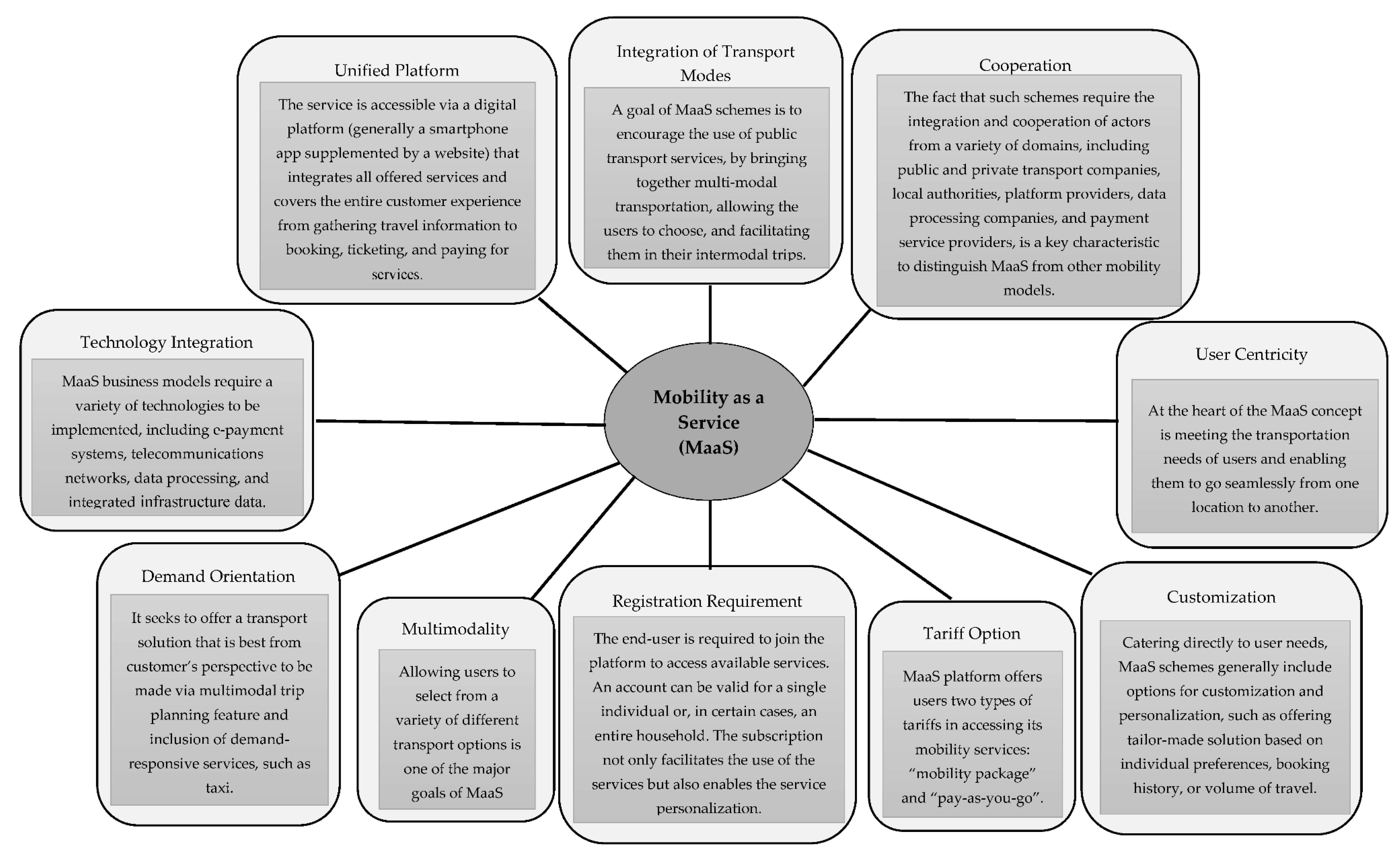

Tailor versatile multi-modal learning for multi-label emotion recognition. Y Zhang, M Chen, J Shen, C Wang. Proceedings of the AAAI Conference on Artificial …

Learning Modality-Specific and -Agnostic Representations for

Tailor Versatile Multi-modal Learning for Multi-label Emotion Recognition. arXiv e-prints (2022). [53] Zheng Zhang, Jeff M Girard, Yue Wu, …

A Versatile Multimodal Learning Framework For Zero-shot Emotion

We integrate prior knowledge into a novel affective graph space that generates tailored label embeddings capturing inter-label relationships. To obtain …
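The snippet does not say how the affective graph turns into label embeddings. One common way to make label embeddings reflect inter-label relationships is GCN-style propagation over a label graph, and the sketch below assumes that technique. The label names, the adjacency matrix, and the one-hot starting embeddings are all hypothetical; this is an illustration of the general idea, not the framework's published method.

```python
import math

def normalize_adjacency(adj):
    """GCN-style symmetric normalization D^-1/2 (A + I) D^-1/2 of a label graph."""
    n = len(adj)
    # Add self-loops so each label keeps part of its own embedding.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def propagate(adj_norm, emb):
    """One propagation step: each label's embedding becomes a degree-weighted
    mix of its own and its neighbours' embeddings."""
    n, dim = len(emb), len(emb[0])
    return [[sum(adj_norm[i][k] * emb[k][c] for k in range(n)) for c in range(dim)]
            for i in range(n)]

# Hypothetical label graph: "sad" and "angry" are linked, "happy" is isolated.
labels = ["happy", "sad", "angry"]
adj = [[0, 0, 0],
       [0, 0, 1],
       [0, 1, 0]]
emb = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # one-hot start
out = propagate(normalize_adjacency(adj), emb)
for name, vec in zip(labels, out):
    print(name, vec)
```

After one step the linked labels "sad" and "angry" share the embedding `[0.0, 0.5, 0.5]`, while the isolated "happy" is unchanged. In a real model the initial embeddings and edge weights would come from prior knowledge or training rather than being set by hand.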

Learning Robust Multi-Modal Representation for Multi-Label

Tailor versatile multi-modal learning for multi-label emotion recognition. arXiv preprint arXiv:2201.05834 (2022). [34] Sicheng Zhao, …

kniter1/TAILOR: Pytorch implementation for Tailor Versatile - GitHub

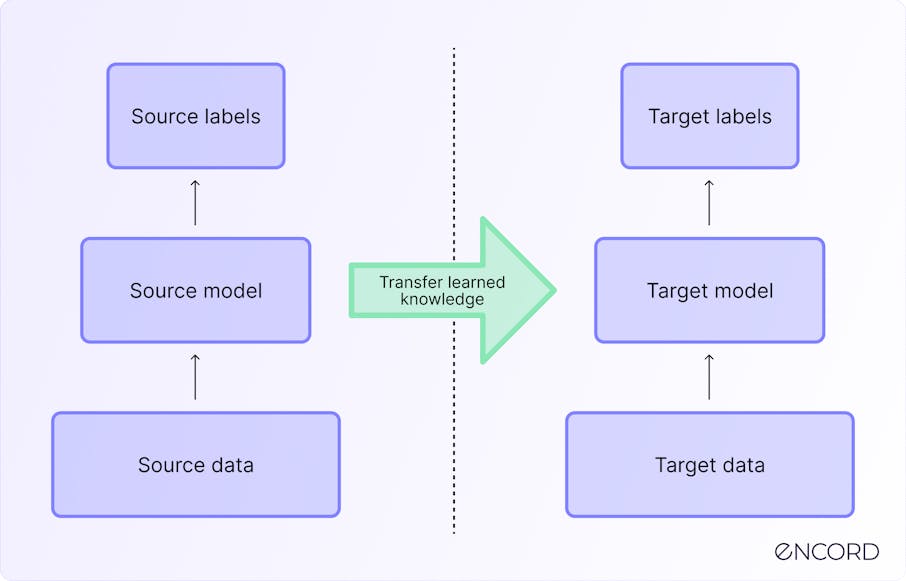

TAILOR comprises three modules: Unimodal Extractor, Adversarial Multi-modal Refinement, and Label-Modal Alignment.
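To make the data flow between these three modules concrete, here is a toy, dependency-free sketch. Everything in it is an assumption for illustration: the function names, the mean/residual split standing in for shared and modality-specific features, and the single dot-product attention step standing in for label-modal alignment. The adversarial training that gives the refinement module its name is omitted entirely; the kniter1/TAILOR repository holds the actual PyTorch implementation.

```python
import math

# Toy sketch of a TAILOR-like data flow. All names, shapes and numbers are
# hypothetical; the real model is a PyTorch network with learned parameters.

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def linear(weights, x):
    """Project vector x with a weight matrix given as a list of rows."""
    return [dot(row, x) for row in weights]

def unimodal_extractor(features, projections):
    """Stage 1 (assumed): map each modality's raw features into a shared space."""
    return {m: linear(projections[m], x) for m, x in features.items()}

def refine(encoded):
    """Stage 2 (assumed): split each modality into a common part (cross-modal
    mean) and a private part (residual). Adversarial training is NOT modelled."""
    mods = list(encoded)
    dim = len(encoded[mods[0]])
    common = [sum(encoded[m][i] for m in mods) / len(mods) for i in range(dim)]
    private = {m: [encoded[m][i] - common[i] for i in range(dim)] for m in mods}
    return common, private

def softmax(xs):
    top = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def align_labels(label_emb, tokens):
    """Stage 3 (assumed): each label embedding attends over the refined tokens
    and yields its own sigmoid probability, so labels are decided independently."""
    probs = {}
    for label, query in label_emb.items():
        weights = softmax([dot(query, t) for t in tokens])
        pooled = [sum(w * t[i] for w, t in zip(weights, tokens)) for i in range(len(query))]
        probs[label] = 1.0 / (1.0 + math.exp(-dot(query, pooled)))
    return probs

# Usage with made-up 2-d features for three modalities and two emotion labels.
features = {"text": [1.0, 0.0], "audio": [0.0, 1.0], "visual": [1.0, 1.0]}
projections = {m: [[1.0, 0.0], [0.0, 1.0]] for m in features}  # identity projections
encoded = unimodal_extractor(features, projections)
common, private = refine(encoded)
tokens = [common] + list(private.values())
probs = align_labels({"happy": [2.0, 0.0], "sad": [-2.0, 0.0]}, tokens)
predicted = [label for label, p in probs.items() if p > 0.5]
print(probs, predicted)
```

Using an independent sigmoid per label, rather than a softmax across labels, is what makes the output multi-label: several emotions can pass the 0.5 threshold at once.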

FDR-MSA: Enhancing multimodal sentiment analysis through feature …

Yi Zhang, Mingyuan Chen, Jundong Shen, Chongjun Wang, Tailor Versatile Multi-modal Learning for Multi-label Emotion Recognition, in …

Tailor Versatile Multi-Modal Learning for Multi-Label Emotion Recognition. Yi Zhang, Mingyuan Chen, Jundong Shen, Chongjun Wang∗. State Key Laboratory for …